Okay, let’s be honest. Deepfakes used to sound like something out of a sci-fi movie, right? But here we are, smack-dab in the middle of a world where you can’t always trust your own eyes (or ears). It’s a little unsettling, to say the least. I mean, I remember when a blurry photo was enough to stir up conspiracy theories. Now? We’ve got flawlessly faked videos that can make world leaders say things they’d never actually say. That’s…a problem.

It’s not just about politics, either. Think about the potential for financial scams, ruined reputations, or even just good old-fashioned chaos. And honestly? The sheer scale of it is daunting. How do we even begin to sort through the digital noise and figure out what’s real and what’s not? That question is crucial, and I’ll keep coming back to it. We need strategies, and fast.

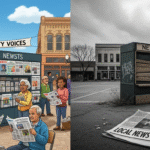

The Wild West of Synthetic Media

So, what exactly is a deepfake? Well, in simple terms, it’s a manipulated video or audio clip that uses AI to replace one person’s likeness with another’s. The tech behind it is getting more sophisticated all the time. It used to be fairly easy to spot the telltale signs – weird lip-syncing, unnatural blinking, that uncanny valley vibe. But now? The fakes are often so convincing that even experts struggle to tell the difference. And that’s the scary part.

But it’s also kind of amazing, isn’t it? I mean, the technology itself is incredible. It’s just…the implications. Think about it this way: we’re entering an era where video evidence, something we’ve always considered pretty reliable, can no longer be taken at face value. It’s like the rug’s been pulled out from under us. And honestly? It has real-world consequences. False information can spread like wildfire across social media, influencing public opinion and even inciting violence.

Why We Fall for It: The Psychology of Misinformation

Here’s the thing: it’s not just about the technology. It’s about us, too. Our brains are wired to take shortcuts, to believe what confirms our existing biases. That’s called confirmation bias, and it’s a powerful thing. We’re more likely to share a fake video that reinforces our beliefs, even if we don’t consciously realize it’s fake. And once something’s out there, it’s incredibly difficult to retract it. The internet never forgets, after all.

And then there’s the sheer volume of information we’re bombarded with every day. We’re constantly scrolling, skimming, and clicking, without taking the time to critically evaluate what we’re seeing. It’s cognitive overload, and it makes us vulnerable. To put it more clearly: we’re not actively trying to get duped. Our brains are simply overwhelmed, and that leaves us susceptible to misinformation.

Fighting Back: Strategies for Navigating the Deepfake Era

Okay, so what can we do about it? Is there any hope of winning this fight? I think there is, but it’s going to take a multi-pronged approach. First, we need better technology to detect deepfakes. Researchers are working on AI algorithms that can analyze videos and audio to identify subtle anomalies that indicate manipulation. But the deepfake creators are constantly improving their techniques, so it’s an ongoing arms race.

Education is key, too. We need to teach people how to spot the signs of a deepfake – unnatural movements, inconsistencies in lighting, weird audio distortions. Fact-checking organizations play a crucial role in debunking false information and holding creators accountable. But, and this is a big but, fact-checking alone isn’t enough. We need to encourage critical thinking skills and media literacy from a young age. How do we do that? Well, that’s a whole other conversation… In the meantime, read about AI Generated News: Benefits and Concerns.

And then there’s the legal and ethical side of things. Should there be laws against creating or sharing deepfakes that are intended to deceive or harm? It’s a tricky question, because we also need to protect freedom of speech and avoid unintended consequences. But it’s a conversation we need to have.

The Role of Social Media Platforms

Social media platforms, of course, have a massive responsibility here. They’re the main distribution channels for deepfakes and misinformation. And while some platforms have taken steps to combat the problem, it’s often not enough. They need to invest more resources in content moderation, fact-checking, and algorithm design. They also need to be more transparent about how they’re handling deepfakes and what steps they’re taking to protect their users. Above all, they need to be proactive, not reactive.

Ultimately, fighting deepfakes and misinformation is going to require a collective effort. It’s not just about the technology or the platforms or the laws. It’s about all of us. We need to be more critical consumers of information, more responsible sharers of content, and more engaged citizens of the digital world.

Navigating Truth in a World of Fabrications

The frustrating thing about all of this is the eroding effect on trust. We already live in a world where trust in institutions is declining. Deepfakes only exacerbate that problem. When people can’t trust what they see or hear, it becomes harder to have meaningful conversations or make informed decisions. And that’s a threat to democracy itself. But all is not lost. Remember, humans are smart. With the right tools, education, and a healthy dose of skepticism, we can navigate this new reality and preserve the truth. Also, consider the work being done on Amazon GuardDuty.

FAQ: Deepfakes and Misinformation in the Digital Age

How can I tell if a video is a deepfake?

That’s the million-dollar question, isn’t it? While there’s no foolproof method, there are a few things to look out for. Pay attention to the person’s face – are there any unnatural movements or distortions? Does the lighting seem inconsistent? Does the audio sync up perfectly with the video? If something feels off, it probably is. Cross-reference the video with other sources to see if it’s been verified by reputable news organizations or fact-checking sites.
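If it helps to see that checklist written down, here’s a minimal Python sketch of it. To be clear, this is not a deepfake detector. It just records your own answers to the questions above and lists the red flags. The names `VideoChecks`, `red_flags`, and `worth_a_second_look` are made up for this illustration, and the rule that any single flag deserves a closer look is my assumption, not an established threshold.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VideoChecks:
    """Your own answers to the checklist questions (hypothetical structure)."""
    unnatural_face_movement: bool       # odd movements or distortions in the face
    inconsistent_lighting: bool         # lighting that does not match the scene
    audio_out_of_sync: bool             # audio that does not line up with the video
    verified_by_reputable_source: bool  # confirmed by a news or fact-checking site


def red_flags(checks: VideoChecks) -> List[str]:
    """Return a human-readable list of the warning signs that were observed."""
    flags = []
    if checks.unnatural_face_movement:
        flags.append("unnatural facial movement or distortion")
    if checks.inconsistent_lighting:
        flags.append("inconsistent lighting")
    if checks.audio_out_of_sync:
        flags.append("audio does not match the video")
    if not checks.verified_by_reputable_source:
        flags.append("not verified by a reputable source")
    return flags


def worth_a_second_look(checks: VideoChecks) -> bool:
    """Assumed rule of thumb: any single red flag is a reason to pause before sharing."""
    return len(red_flags(checks)) > 0


if __name__ == "__main__":
    clip = VideoChecks(
        unnatural_face_movement=False,
        inconsistent_lighting=True,
        audio_out_of_sync=True,
        verified_by_reputable_source=False,
    )
    for flag in red_flags(clip):
        print("Red flag:", flag)
    print("Pause before sharing?", worth_a_second_look(clip))
```

A clean result here doesn’t prove a video is real. It only means you didn’t notice any of the obvious signs, which is why the cross-referencing step still matters.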

What is the main problem when deepfakes and misinformation spread?

Think about the damage a convincing fake video could do. Reputations ruined, elections swayed, even violence incited. The real problem, though, is the erosion of trust. If we can’t trust what we see and hear, it becomes harder to have meaningful conversations or make informed decisions. And that’s a slippery slope.

Aren’t deepfakes just harmless fun?

Sometimes, yes! There are plenty of deepfakes out there that are purely for entertainment purposes – think movie parodies or funny celebrity impersonations. But it’s important to distinguish between harmless fun and malicious intent. When deepfakes are used to spread misinformation or deceive people, that’s when they become a serious problem. Context matters.

What are social media platforms doing to combat deepfakes?

Some platforms are investing in AI technology to detect and remove deepfakes. Others are partnering with fact-checking organizations to debunk false information. And some are simply relying on user reporting to flag suspicious content. But honestly? It’s a constant game of cat and mouse. The deepfake creators are always finding new ways to circumvent the safeguards, so the platforms need to stay one step ahead.