Okay, let’s be honest. When I first heard about AI writing news articles, my first reaction was, “Great, another reason for me to drink more coffee!” (And believe me, I love my coffee.) But after diving into it, I’ve got to admit, this is way more nuanced than I expected. It’s not just about robots stealing jobs, though that’s a valid concern. It’s about the very nature of information itself, and how quickly (and accurately) it reaches us.

We live in an age of information overload. News cycles are 24/7, and everyone’s scrambling to be first. But is being first always best? Think about it this way: you wouldn’t want a doctor diagnosing you at lightning speed if it meant missing something important, right? I’d argue the same goes for news.

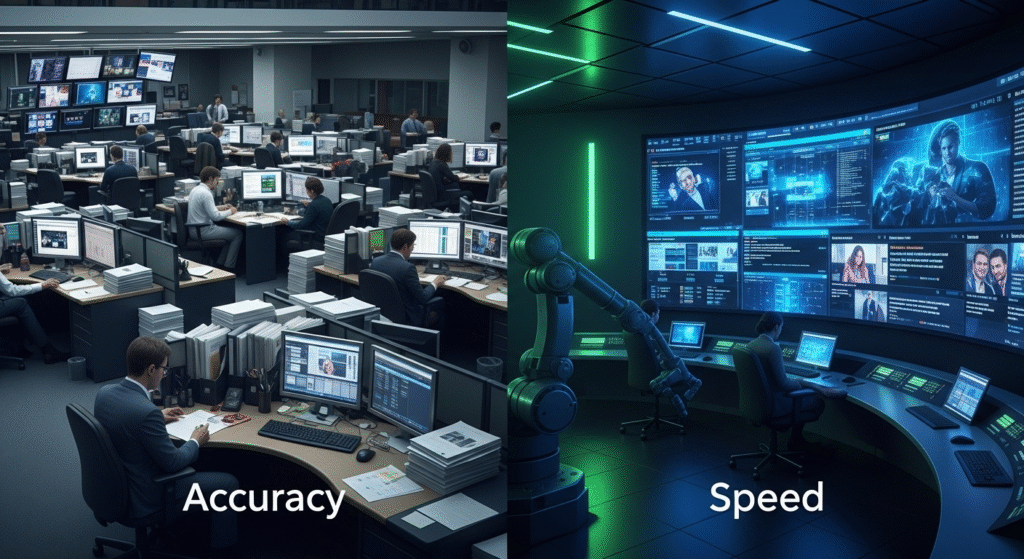

The Allure of Speed: AI’s Advantage in News Generation

Here’s the thing: AI can churn out articles at an astonishing pace. We’re talking about taking raw data (sports scores, financial figures, weather reports) and instantly transforming it into coherent prose. This is perfect for routine reports, where speed is paramount. You need the earnings reports now? AI’s got you covered. This kind of lightning-fast reporting also makes it possible to cover a broader range of data and niche topics. It makes you wonder whether, in the long run, it could help with coverage gaps such as local news deserts. One can hope.
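To make the "raw data in, prose out" idea a bit more concrete, here is a minimal sketch in Python. It uses plain fill-in-the-blank templates, which is only one simple way to do this and not a claim about how any particular newsroom tool works. The `GameResult` record, the `write_recap` function, and the team names are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class GameResult:
    """Hypothetical structured record for one finished game."""
    home_team: str
    away_team: str
    home_score: int
    away_score: int


def write_recap(result: GameResult) -> str:
    """Turn one structured result into a one-sentence recap using fixed templates."""
    if result.home_score == result.away_score:
        return (
            f"{result.home_team} and {result.away_team} drew "
            f"{result.home_score}-{result.away_score}."
        )
    # Work out winner and loser so one template covers home and away wins.
    if result.home_score > result.away_score:
        winner, loser = result.home_team, result.away_team
        high, low = result.home_score, result.away_score
    else:
        winner, loser = result.away_team, result.home_team
        high, low = result.away_score, result.home_score
    # A one-point margin gets a slightly different verb, purely for variety.
    verb = "edged" if high - low == 1 else "beat"
    return f"{winner} {verb} {loser} {high}-{low}."


if __name__ == "__main__":
    print(write_recap(GameResult("Rovers", "United", 3, 1)))
```

Notice what the sketch can and can’t do: it reports the score instantly, but it has no idea why the game went the way it did.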

But, wait, there’s more! AI doesn’t get tired. It doesn’t need coffee (unlike some of us). It can work tirelessly, producing a steady stream of content, freeing up human journalists to focus on more complex, investigative work. In theory, at least.

Accuracy Under the Microscope: Where AI Stumbles

And that leads us to the big question: accuracy. Can we really trust AI to get it right? Well… it’s complicated. AI is only as good as the data it’s fed. If the data is flawed, the output will be flawed. Garbage in, garbage out, as they say. I initially thought errors were rare, but then I came across several reports that the tech companies behind these tools were regularly releasing fixes for errors.
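One way to picture "garbage in, garbage out" is a sanity check that runs before any text gets generated. The Python sketch below is just an illustration under my own assumptions: the `find_problems` function and the field names (`company`, `quarter`, `revenue_usd`) are hypothetical, and a real pipeline would need far more checks than this.

```python
from typing import List


def find_problems(record: dict) -> List[str]:
    """Return a list of problems found in a record; an empty list means it passed."""
    problems = []
    # Required fields must be present and non-empty.
    for field in ("company", "quarter", "revenue_usd"):
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # A negative revenue figure is almost certainly a data error.
    revenue = record.get("revenue_usd")
    if isinstance(revenue, (int, float)) and revenue < 0:
        problems.append("revenue_usd is negative")
    return problems


if __name__ == "__main__":
    record = {"company": "Acme", "quarter": "", "revenue_usd": -5}
    issues = find_problems(record)
    if issues:
        print("Hold for human review:", ", ".join(issues))
```

A check like this only catches obviously broken records. A figure that is wrong but plausible sails straight through, which is exactly why a human still needs to look.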

Furthermore, AI struggles with nuance, context, and critical thinking. It can report what happened, but it often misses why it happened. It can’t conduct interviews, analyze complex situations, or bring a human perspective to the story. This means AI-generated news can be bland, generic, and even misleading. Perhaps more importantly, it is susceptible to manipulation. Imagine someone feeding it biased data: the consequences could be pretty serious.

Think about it this way: AI can write a perfectly grammatically correct sentence about a political event, but it can’t understand the underlying political tensions or the potential impact on real people. It’s all surface, no depth. Which, in my opinion, makes it a tool, not a replacement for human journalists.

Ethical Considerations: Transparency and Trust

This is where things get really interesting. Should AI-generated news be labeled as such? Absolutely! Transparency is crucial. Readers have a right to know whether they’re reading something written by a human or a machine. Otherwise, we risk eroding trust in the media as a whole. The frustrating thing about this whole area is how quickly it’s evolving and how slowly our ethical frameworks are adapting. Is it still news if it gets the details right but is produced in a biased way? Where do we draw the line?

And that’s where the human element comes back in. We need human editors to oversee AI-generated content, fact-check its accuracy, and ensure it meets ethical standards. We need human journalists to provide context, analysis, and perspective. It’s also worth reading some of the AI-generated content that is already out there, so you know what it looks like. AI can be a powerful tool, but it’s just that: a tool. It’s up to us to use it responsibly.

FAQ: Your Questions About AI and News Answered

How do I know if I’m reading an AI-generated news article?

That’s a tricky one! Right now, most reputable news organizations are being transparent about using AI, but not all are. Look for clues like overly simplistic language, a lack of human perspective, and a focus on data rather than analysis. If something feels “off,” it might be AI-generated. And when in doubt, check the source! Read the site’s about section or look up the reporter’s profile to check that the byline belongs to a real person. Also read critically and watch for gaps in the content.

Can AI-generated news be biased?

Absolutely! AI learns from the data it’s trained on, and if that data reflects existing biases, the AI will perpetuate them. It can also be deliberately manipulated to promote a specific agenda. Always be aware of the potential for bias and seek out diverse sources of information.

Is AI going to replace human journalists?

Probably not entirely. While AI can automate certain tasks, it can’t replace the critical thinking, creativity, and ethical judgment of human journalists. More likely, we’ll see a hybrid model where AI assists journalists in their work, freeing them up to focus on more complex and investigative reporting, as well as harder assignments such as climate change coverage that balances urgency with hope.

What are the benefits of AI in news?

The biggest benefit is speed. AI can quickly generate reports on routine events, allowing news organizations to cover a wider range of topics and deliver information to audiences faster. This can be especially useful for covering local news or providing real-time updates on developing stories. So while there are concerns, there are also benefits. It’s all about finding the right balance.

Ultimately, the rise of AI-generated news is a double-edged sword. It offers incredible potential for speed and efficiency, but it also raises serious questions about accuracy, ethics, and trust. It’s up to us, as consumers of news, to be critical thinkers and demand transparency. And it’s up to the media industry to use AI responsibly and ethically. The future of news depends on it.